This tutorial will explain how two specific files, robots.txt and LLMS.txt, work together to guide different types of “bots” to the right content on your site. You’ll learn what these files are for and how Carrot takes care of these for you.

Think of it like this: your website is a house. Robots.txt is the virtual welcome mat and a list of rules for traditional search engine bots. It tells them which pages or files to explore and which parts of your website are off-limits. LLMS.txt is a detailed floor plan for a new type of visitor: an AI assistant. It provides a quick, organized summary of the most important aspects of your site and their purpose, making it easy for the AI to understand your website at a glance.

Robots.txt

The robots.txt file is a standard text file that lives at the root of every Carrot website. Its purpose is to communicate with traditional web crawlers—the bots used by search engines like Google and Bing—to tell them which pages and files they are allowed to crawl (visit and read) and which they should not.

How it works:

- Rules for Bots: The file contains rules using simple commands like `User-agent:` and `Disallow:`. `User-agent:` names the bot a group of rules applies to, and `Disallow:` lists a path that bot should not crawl. For example, a rule might tell a specific search engine bot to avoid a certain folder on your site.

- Controlling Visibility: By telling search engines what not to crawl, we can keep private or unimportant pages (like admin logins or a form’s “Thank You” page) out of the crawl. This helps keep them out of search results and keeps your site’s public presence clean and professional.

- Automatic for Carrot Websites: You don’t have to worry about creating or managing your robots.txt file. Carrot automatically generates and optimizes this file for your website to ensure search engines can effectively find and index your most important pages.
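To see how a crawler applies `User-agent:` and `Disallow:` rules, here is a minimal sketch using Python’s built-in `urllib.robotparser` module. The rules, the `ExampleBot` agent, and the example.com paths are hypothetical illustrations, not the contents of the file Carrot generates for your site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for illustration only; Carrot's generated file may differ.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /thank-you/

User-agent: ExampleBot
Disallow: /
"""

parser = RobotFileParser()
# parse() takes an iterable of lines, so no network request is made.
parser.parse(ROBOTS_TXT.splitlines())


def allowed(agent: str, url: str) -> bool:
    """Return True if the parsed rules let `agent` crawl `url`."""
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    # Googlebot has no group of its own, so the "*" group applies.
    print(allowed("Googlebot", "https://www.example.com/sell-your-house/"))  # True
    print(allowed("Googlebot", "https://www.example.com/thank-you/"))        # False
    # ExampleBot has its own group that disallows everything.
    print(allowed("ExampleBot", "https://www.example.com/"))                 # False
```

Rules are matched by path prefix, so `Disallow: /thank-you/` also covers any page nested under that folder.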

LLMS.txt

The llms.txt file is a newer, proposed standard designed specifically to help Large Language Models (LLMs), the AI behind tools like ChatGPT and Google Gemini, understand your website. While robots.txt tells bots where they can’t go, llms.txt tells AI which parts of your site are the most important and how the content is structured. It’s like a sitemap for AI.

This file helps AI get a quick, accurate overview of your site without having to “read” every single page. This makes it easier for AI to use your site’s information to answer user questions, potentially increasing your visibility in AI-powered search and conversational platforms.

You have two options for managing your llms.txt file:

- Automated LLMS.txt: This is the default setting and is perfect for most users. Carrot automatically creates a comprehensive llms.txt file for you. It includes key information like:

  - Your site’s title and meta description.

  - A list of your core pages and their descriptions.

  - A list of your blog posts and their titles.

  This automated file ensures your website’s content is structured in a way that AI can easily understand and process, giving you a head start with AI visibility.

- Custom LLMS.txt: If you’re a more advanced user and want full control, you can create a custom llms.txt file. This option is available in your SEO Settings. You can write your own content and structure to highlight specific information you want AI to focus on, such as a special report, a specific service page, or your unique selling proposition.
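For a sense of what an llms.txt file looks like, here is a minimal Python sketch that assembles one from the kinds of information listed above. It follows the markdown layout described in the public llms.txt proposal (an H1 title, a blockquote summary, then H2 sections of links). The `Link` type, `build_llms_txt` function, section names, and example site are hypothetical, and Carrot’s automated file may be organized differently.

```python
from dataclasses import dataclass


@dataclass
class Link:
    """One entry in an llms.txt link list (hypothetical helper type)."""
    title: str
    url: str
    note: str = ""


def build_llms_txt(site_title: str, description: str,
                   pages: list[Link], posts: list[Link]) -> str:
    """Assemble a markdown llms.txt: H1 title, blockquote summary, H2 link sections."""
    lines = [f"# {site_title}", "", f"> {description}", ""]
    for heading, links in (("Core Pages", pages), ("Blog Posts", posts)):
        if not links:
            continue  # omit a section entirely when it has no entries
        lines += [f"## {heading}", ""]
        for link in links:
            entry = f"- [{link.title}]({link.url})"
            if link.note:
                entry += f": {link.note}"  # optional one-line note after a colon
            lines.append(entry)
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    print(build_llms_txt(
        "Example Home Buyers",
        "We buy houses for cash in Example City.",
        pages=[Link("Sell Your House", "https://www.example.com/sell/",
                    "How our cash offer process works")],
        posts=[Link("5 Tips for Selling Fast", "https://www.example.com/blog/tips/")],
    ))
```

Keeping each entry to a link plus a one-line note mirrors the proposal’s intent: a short, scannable index that points an AI to fuller pages rather than repeating their content.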

Where to Find Your Robots.txt and LLMS.txt Settings

You can find your robots.txt and llms.txt settings in the SEO Settings area of your Carrot website.

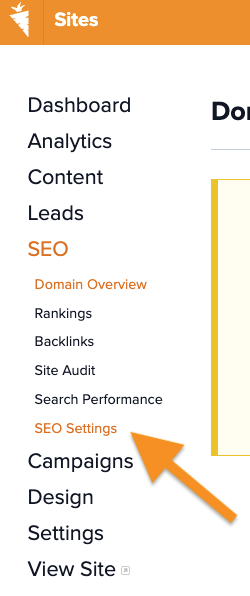

In the left-side navigation of your site dashboard, open the drop-down under SEO and click SEO Settings. While logged into your Carrot account, you can also click here to navigate directly to your site’s SEO settings.

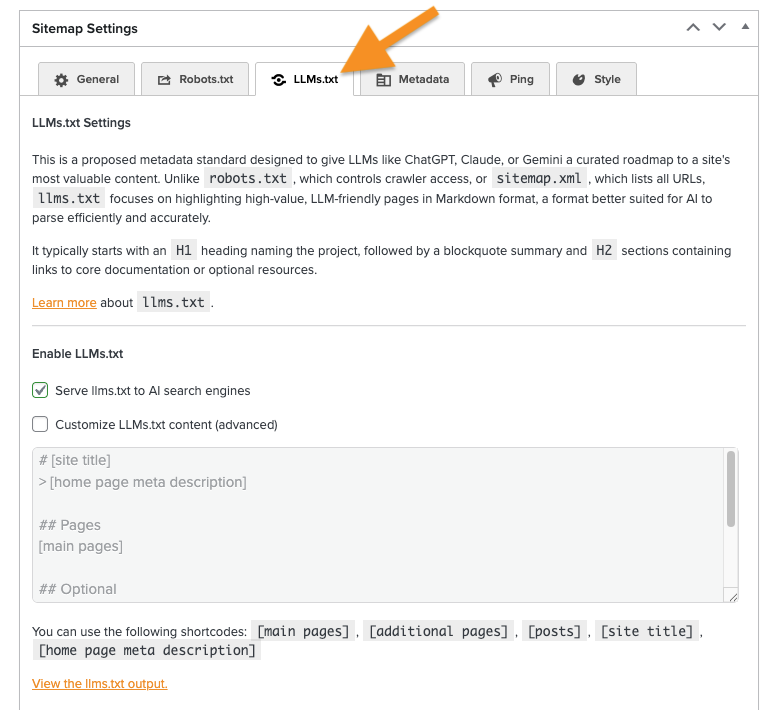

In the SEO Settings, scroll down toward the bottom to the section called “Sitemap Settings,” where you’ll see a tab for Robots.txt and a tab for LLMS.txt.

Under the Robots.txt tab, you should see a “Sitemap Hinting” box that is checked by default. This adds your sitemap’s location to your robots.txt file. We generally recommend leaving it enabled, but if for some reason you don’t want to include the sitemap in your robots.txt, you can uncheck the box.
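The standard way to list a sitemap in robots.txt is a `Sitemap:` line. As a minimal sketch, Python’s `urllib.robotparser` (Python 3.8+) shows how a crawler picks that line up. The sitemap URL and the `sitemap_hints` helper are hypothetical placeholders, not necessarily where Carrot places your sitemap.

```python
from urllib.robotparser import RobotFileParser


def sitemap_hints(robots_txt: str) -> list[str]:
    """Return the Sitemap URLs a crawler would discover in a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when the file has no Sitemap line.
    return parser.site_maps() or []


# Hypothetical files: one with Sitemap Hinting enabled, one without.
with_hint = "User-agent: *\nDisallow: /admin/\n\nSitemap: https://www.example.com/sitemap.xml\n"
without_hint = "User-agent: *\nDisallow: /admin/\n"

print(sitemap_hints(with_hint))     # ['https://www.example.com/sitemap.xml']
print(sitemap_hints(without_hint))  # []
```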

Under the LLMS.txt tab, the option to serve your llms.txt to AI search engines is checked by default. If you want to customize the llms.txt content further, check the customization box and then edit the field below it.
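If you write a custom llms.txt, a quick structural check before pasting it into the field can catch formatting slips. This is a hypothetical helper, not a Carrot feature, and it only checks two conventions from the public llms.txt proposal: an H1 title as the first line, and `- [Name](URL): notes` entries under H2 headings.

```python
import re

# Matches a link-list entry such as "- [Name](https://example.com/page): optional notes".
LINK_ITEM = re.compile(r"^- \[[^\]]+\]\([^)\s]+\)(: .+)?$")


def check_llms_txt(text: str) -> list[str]:
    """Return problems found in a draft llms.txt; an empty list means none were found."""
    problems = []
    lines = [line.rstrip() for line in text.splitlines()]
    non_blank = [line for line in lines if line]
    if not non_blank or not non_blank[0].startswith("# "):
        problems.append("The first line should be an H1 title, e.g. '# Your Company'.")
    in_section = False
    for number, line in enumerate(lines, start=1):
        if line.startswith("## "):
            in_section = True  # list items after an H2 heading should be links
        elif in_section and line.startswith("- ") and not LINK_ITEM.match(line):
            problems.append(f"Line {number}: list items should look like '- [Name](URL): notes'.")
    return problems


draft = (
    "# Example Home Buyers\n\n"
    "> We buy houses for cash.\n\n"
    "## Services\n\n"
    "- [Sell Your House](https://www.example.com/sell/): Our process\n"
    "- Free report\n"
)
print(check_llms_txt(draft))  # flags line 8, the "- Free report" item with no link
```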

Watch this video to learn more about this feature:

Conclusion

By using both robots.txt and llms.txt, your Carrot website is optimized for both traditional search engines and the new wave of AI assistants.

- Robots.txt ensures your public pages are discoverable by search engines and helps you control what they crawl.

- LLMS.txt provides a clear, structured guide for AI, helping it understand your site’s purpose and key content more efficiently.

Together, these files give your site the best chance to be found, understood, and highlighted by both search engines and AI, ultimately helping you attract more leads and grow your real estate investment business.

Learn More:

- Google Approved Sitemaps and How to Submit

- How To Use and Understand Our Search Performance (Google Search Console) Feature

- Frequently Asked Questions about SEO

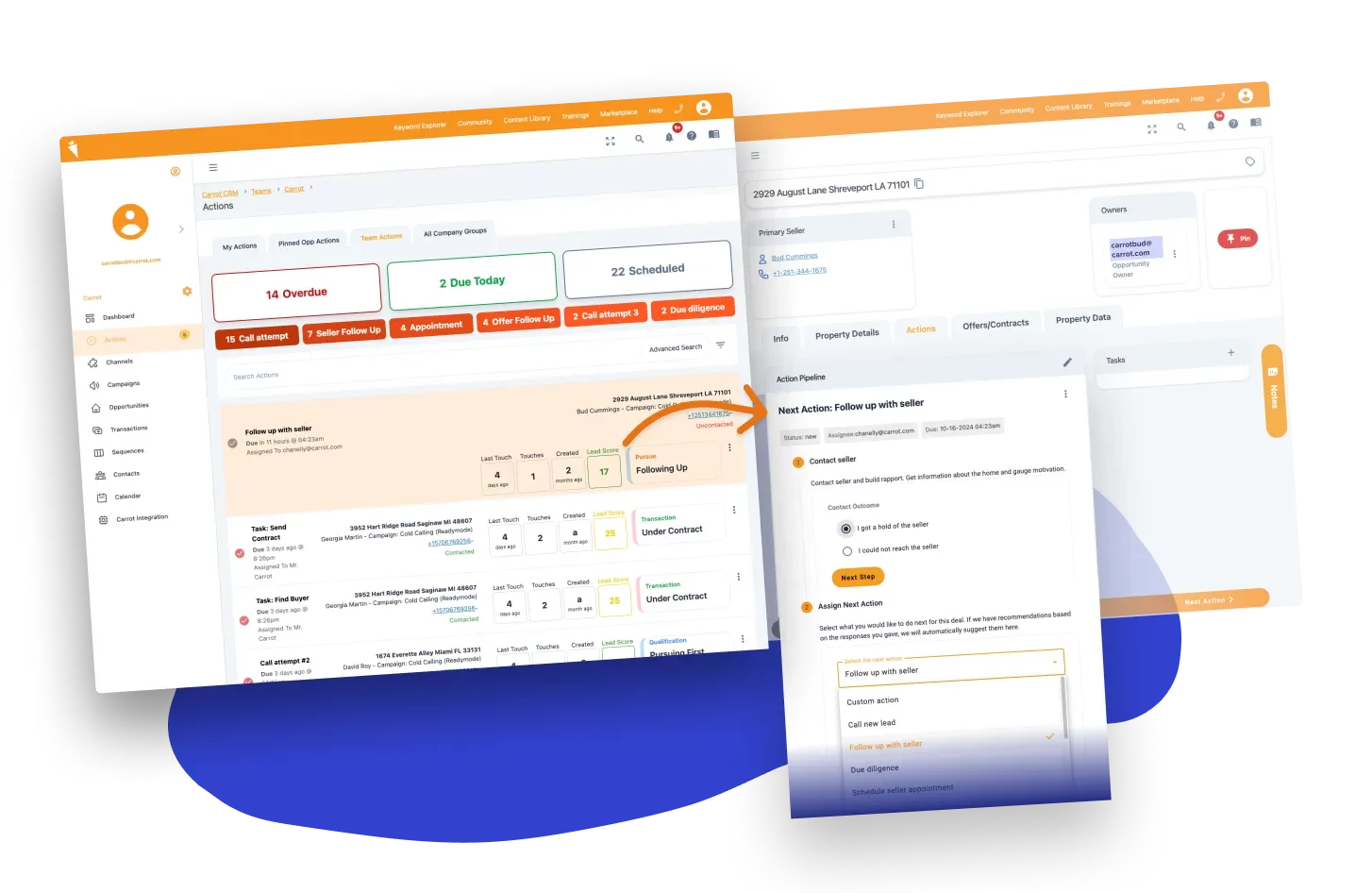

Close More Deals

with Carrot CRM

Grow your revenue and turn more leads into closed

deals with Carrot’s built-in CRM.

Premium Support

& 1:1 Strategy Calls

Members with our Premium Support Add-On can book

a 1:1 video calls for tech questions & strategic advice.